Agreed. But we need a solution against bots just as much. There’s no way the majority of comments in the near future won’t just be LLMs.

Closed instances with vetted members, there’s no other way.

Too high of a barrier to entry is doomed to fail.

Programming.dev does this and is the tenth largest instance.

Techy people are a lot more likely to jump through a couple of hoops for something better, compared to your average Joe who isn’t even aware of the problem

Techy people are a lot more likely to jump through hoops because their knowledge and experience make it easier for them, because they understand it’s worthwhile, or because it’s fun. If software can be made easier for non-techy people and there are no downsides, then of course that ought to be done.

Ok, now tell the Linux people this.

It’s not always obvious or easy to make what non-techies will find easy. Changes could unintentionally make the experience worse for long-time users.

I know people don’t want to hear it, but can we expect non-techies to meet techies halfway by leveling up their tech skill tree a bit?

Yeah that was kinda my point

I started using Twitter in 2009. It was just techy people back then. Things are allowed to take time and grow organically.

10th largest instance being like 10k users… we’re talking about the need for a solution to help pull the literal billions of users from mainstream social media

There isn’t a solution. People don’t want to pay for something that costs huge resources, so their attention becoming the product that’s sold is inevitable. They also want to doomscroll slop; it’s mindless and mildly entertaining. The same way tabloid newspapers were massively popular before the internet and gossip mags exist despite being utter horseshite. It’s what people want. Truly fighting it would require huge benevolent resources: a group willing to finance a manipulative and compelling experience and then not exploit it for ad dollars, pushing educational things instead or something. Facebook, Twitter etc. are enshittified, but they still cost huge amounts to run. And for all their faults, at least they’re a single point where illegal material can be tackled. There isn’t a proper equivalent for this in decentralised solutions once things scale up. It’s better that free, decentralised services stay small so they can stay under the radar of bots and bad actors. When things do get bigger, then gated communities probably are the way to go. Perhaps until there’s a social media not-for-profit that’s trusted to manage identity, that people don’t mind contributing costs to. But that’s a huge undertaking. One day hopefully…

They also want to doomscroll slop; it’s mindless and mildly entertaining. The same way tabloid newspapers were massively popular before the internet and gossip mags exist despite being utter horseshite. It’s what people want.

The same analogy is applicable to food.

People want to eat fast food because it’s tasty, easily available and cheap. Healthy food is hard to come by, needs time to prepare and might not always be tasty. We have the concepts of nutrition taught at school and people still want to eat fast food. We have to teach social media and internet literacy at school the same way, and I’m not sure even that will be enough.

So we need a documentary like Super Size Me but for social media. I think the release of that documentary was the only time I’ve seen the general population’s attitude toward fast food change.

We have a human-vetted application process too, and that’s why there are rarely any bots or spam accounts originating from our instance. I imagine it’s a similar situation for programming.dev. It’s just not worth the tradeoff to have completely open signups imo. The last thing Lemmy needs is a massive influx of Meta users from Threads, Facebook or Instagram, or from shitter. Slow, organic growth is completely fine when you don’t have shareholders and investors to answer to.

The bar is not particularly high with Lemmy, and that is a focused community.

People aren’t (generally) being made aware of the injustice on the other side of the planet while they are asking a question about C#.

Yeah, but people ARE (generally) being made aware of Linux while they are asking a question about the injustice on the other side of the planet. You’re welcome, Lemmy!

/s, also I do not officially represent the instance in any capacity, lol

It’s how most large forums ran back in the day and it worked great. Quality over quantity.

@a1studmuffin @ceenote the only reason these massive Web 2.0 platforms achieved such dominance is because they got huge before governments understood what was happening and then claimed they were too big to follow basic publishing law or properly vet content/posters. So those laws were changed to give them their own special carve-outs. We’re not mentally equipped for social networks this huge.

I disagree, I think we’re built for social networks that huge. The problems happen when money comes into the equation. If we lived in a world without price tags, and resources went where they needed to go instead of to who has the most money, and we were free to experiment with new lifestyles and ideas, we would thrive with a huge and diverse social network. Money is like a religious mind-virus that triggers psychopathy and narcissism in human beings by design, yet we believe in it like it’s a force of nature, like God or something. A new enlightenment is happening thanks to huge social networks allowing us to express our nature; it’s the institutions of control that aren’t equipped to handle such a breakdown of social barriers (like the printing press and the Protestant Reformation, or the Indigenous critiques before the Enlightenment period)

I dunno man. Discord has thousands of closed servers that are doing great.

If we’re talking about breaking tech oligarchs’ hold on social media, no closed server anywhere comes close as a replacement for Meta or Twitter.

We’re talking about the need for a system that gives a majority of people the kind of broad access a main Facebook/Insta/Twitter etc. provides.

I.e., on the scale where someone can go “Hey, I bet my aunt that I haven’t talked to in 15 years might be on here, let me check.” Not a common occurrence in a closed-off Discord community.

Also note that it doesn’t fully solve the primary problem of still being at the whims and control of a single point of failure: Discord Inc. could at any point decide to spy on closed rooms, censor any content it dislikes, etc…

I question if we really need spaces like that anymore. But I see where you are coming from.

I was definitely only thinking about social places like Lemmy and Discord. Not networking places like Facebook and LinkedIn.

It really feels like there are zero solutions available. I’m at a point where I realize that all social networks have major negative impacts on society. And I can’t imagine anything fixing it that isn’t going back to smaller, local, and private. Maybe we don’t need places where you can expect everyone to be there.

When we can expect everyone on the planet to be present in a network, the conflict and vitriol would be perpetual. We are not mature enough, or on the same page enough as a species, to not resort to mudslinging.

Could do something like Discord. Rather than communities, you have “micro instances” existing on top of the larger instance, and communities existing within the micro instances. And of course make micro instances easy to create.

If you could vet members in any meaningful way, they’d be doing it already.

Most instances are open wide to the public.

A few have registration requirements, but it’s usually something banal like “say I agree in Spanish to prove you speak enough Spanish for this instance” etc.

This is a choice any instance can make if it wants. None are making it, but that doesn’t mean they can’t or that it doesn’t work.

I was referring to some of the larger players in the space, i.e. Meta, Twitter, etc.

Right, but they’re shit and don’t do good things out of principle.

We, the Fediverse, are the alternative to them.

Doesn’t matter if they’re shit or not, they don’t want bots crawling their sites, straining their resources, or constantly shit posting, but they do anyway. And if the billion dollar corporations can’t stop them, it’s probably a good bet that you can’t either.

Because they want user data over anything.

We want quality communities over anything.

We can be selective, they go bankrupt without consistent growth.

It could be cool to get a blue check mark for hosting your own domain (excluding the free domains)

It would be more expensive than bot armies are willing to deal with.

Well, what doesn’t work, it seems, is giving (your) access to “anyone”.

Maybe a system where people (I know this will be hard) have to look up outlets themselves, instead of being fed a “stream” dictated by commercial incentives (directly or indirectly).

I’m working on a secure decentralised FOSS network where you can share whatever you want, like websites. Maybe that could be a start.

I think you replied to the wrong comment.

Well no?

What did I miss?

I’m speaking broadly in general terms in the post, about sharing online.

This conversation was about bots. Yours is about “outlets” and “streams”, whatever that is.

If you have some algorithm or few central points distributing information, any information, you’ll get bot problems. If you instead yourself hook up with specific outlets, you won’t have that problem, or if one is bot infested you can switch away from it. That’s hard when everyone is in the same outlet or there are only few big outlets.

Sorry if it’s not clear.

Isn’t that basically the same result though…

Problem with tech oligarchy is it just takes one person to get corrupted and then he blocks out all opinion that attacks his goals.

So the solution is federation, free speech instances that everyone can say whatever they want no matter how unpopular.

How do we counteract the bots…

Well we need the instances to verify who gets in, and make sure the members aren’t bots or saying unpopular things. These instances will need to be big, and well funded.

How do we counter these instance owners getting bought out, corrupted (repeat loop).

No? The problem of tech oligarchy is that they control the systems. Here anyone can start up a new instance at the press of a button. That is the solution, not allowing unfiltered freeze peach garbage.

Small “local” human-sized groups are the only way we ensure the humanity of a group. These groups can vouch for each other just as we do with Fediseer.

One big gatekeeper is not the answer and is exactly the problem we want to get away from.

You counter them by moving to a different instance.

The concept, however, is that if a new instance is detached from the old one… then it’s basically the same story as leaving Myspace for Facebook etc.: we go through the long vetting process over and over again, the userbase fragments, and reaching critical mass is a challenge every time. I mean, yeah, if we start with a circle of 10 trusted networks and one goes wrong, it gets defederated and people migrate to one of the other 9, or a new one gets brought into the circle. But actual vetting is a difficult process to go with, and it makes growing very difficult.

How is it going to be as big as reddit if EVERYONE is vetted?

Why do you want it to be as big as Reddit?

There might be clever ways of doing this: Having volunteers help with the vetting process, allowing a certain number of members per day + a queue and then vetting them along the way…
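A minimal sketch of the daily-cap-plus-queue idea, in Python. `VettingQueue`, `daily_cap`, and the first-come-first-served ordering are illustrative assumptions, not something any Lemmy instance is known to run:

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class VettingQueue:
    """Hypothetical sign-up queue: at most `daily_cap` applicants are
    released to volunteer vetters per day; the rest wait in FIFO order."""

    daily_cap: int
    waiting: deque = field(default_factory=deque)

    def apply(self, username: str) -> int:
        """Queue an applicant and return their 1-based position."""
        self.waiting.append(username)
        return len(self.waiting)

    def release_for_today(self) -> list:
        """Hand up to `daily_cap` applicants to the vetters, oldest first."""
        batch = []
        while self.waiting and len(batch) < self.daily_cap:
            batch.append(self.waiting.popleft())
        return batch
```

The cap keeps the volunteers' workload flat no matter how big a sign-up wave gets; the cost is that applicants may wait days in the queue.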

Can you have an instance that allows viewing other instances, but others can’t see in?

Vetted members could still bot, though, or have their accounts compromised. Not a realistic solution.

Instances that don’t vet users sufficiently get defederated for spam. Users then leave for instances that don’t get blocked. If instances are too heavy handed in their moderation then users leave those instances for more open ones and the market of the fediverse will balance itself out to what the users want.

I wish this was the case but the average user is uninformed and can’t be bothered leaving.

Otherwise the bigger service would be lemmy, not reddit.

the market of the fediverse will balance itself out to what the users want.

Just like classical macroeconomics, you make the deadly (false) assumption that users are rational and will make the choice that’s best for them.

The sad truth is that when Reddit blocked 3rd party apps, and the mods revolted, Reddit was able to drive away the most nerdy users and the disloyal moderators. And this made Reddit a more mainstream place that even my sister and her friends know about now.

We could ask for anonymous digital certificates. It would work like this:

Many countries already issue digital certificates for their citizens, only one certificate per ID. Then anonymous certificates could be made from them. An anonymous certificate contains enough information to be verified as valid but not enough to identify the user. Websites could ask for an anonymous certificate for registration/login. With the certificate they would validate that it’s a human being while keeping that human being anonymous. The only leaked data would probably be the country of origin, as these certificates tend to be authenticated by a national CA.

The only problem I see in this is international adoption outside fully developed countries: many countries not being able to provide this for their citizens, having lower security standards so fraudulent certificates could be made, or having a big enough poor population that would gladly sell their certificates to bot farms.
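One long-known building block for this kind of anonymous certificate is the RSA blind signature: the issuer signs a token without seeing it, so a website can check the signature but can’t link it back to the ID that requested it. A minimal sketch with a toy textbook key (far too small for real use); `Issuer`, `token_digest`, and the one-per-ID rule are hypothetical illustrations, and real national eID schemes may work quite differently:

```python
import hashlib
import secrets
from math import gcd

# Textbook RSA toy key (p=61, q=53). Far too small for real use; it only
# shows the arithmetic of a blind signature.
N, E, D = 3233, 17, 2753


def token_digest(token: bytes) -> int:
    """Map a random anonymous token to an integer below N."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(m: int) -> tuple:
    """User side: hide m behind a random factor r before sending it."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r


class Issuer:
    """Hypothetical national issuer: signs one blinded token per citizen ID."""

    def __init__(self):
        self.already_issued = set()

    def sign_blinded(self, citizen_id: str, blinded: int) -> int:
        if citizen_id in self.already_issued:
            raise ValueError("one anonymous certificate per ID")
        self.already_issued.add(citizen_id)
        return pow(blinded, D, N)  # the issuer never sees m itself


def unblind(blind_sig: int, r: int) -> int:
    """User side: strip r, leaving an ordinary signature on m."""
    return (blind_sig * pow(r, -1, N)) % N


def verify(m: int, sig: int) -> bool:
    """Website side: checks the issuer's signature without learning the ID."""
    return pow(sig, E, N) == m
```

The issuer learns who asked but not which token it signed; the website learns which token but not who asked. It does nothing about the resale problem raised above, since a sold token verifies just as well.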

Your last sentence highlights the problem. I can have a bot that posts for me. Also, if an authority is in charge of issuing the certificates then they have an incentive to create some fake ones.

Bots are vastly more useful as the ratio of bots to humans drops.

Also the problem of relying on a nation state to allow these certificates to be issued in the first place. A repressive regime could simply refuse to give its citizens a certificate, which would effectively block them from access to a platform that required them.

we have to use trust from real life. it’s the only thing that centralized entities can’t fake

I signed up for the Arch GitLab last week and there was a 12-step identification process that was completely ridiculous. It’s clear 99.99% of users will just give up.

I mentioned this in another comment, but we need to somehow move away from free-form text. So here’s a super flawed makes-you-think idea to start the conversation:

Suppose you had an alternative kind of Lemmy instance where every post has to include both the post like normal and a “Simple English” summary of your own post. (Like, using only the “ten hundred most common words” Simple English) If your summary doesn’t match your text, that’s bannable. (It’s a hypothetical, just go with me on this.)

Now you have simple text you can search against, use automated moderation tools on, and run scripts against. If there’s a debate, code can follow the conversation and intervene if someone is being dishonest. If lots of users are saying the same thing, their statements can be merged to avoid duplicate effort. If someone is breaking the rules, rule enforcement can be automated.

Ok so obviously this idea as written can never work. (Though I love the idea of brand new users only being allowed to post in Simple English until they are allow-listed, to avoid spam, but that’s a different thing.) But the essence and meaning of a post can be represented in some way. Analyze things automatically with an LLM, make people diagram their sentences like English class, I don’t know.
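The purely mechanical part of the hypothetical (checking that a summary uses only words from an allowed list) is easy to sketch. `ALLOWED_WORDS` here is a tiny made-up stand-in, not the actual ten-hundred-word list, and checking that the summary actually matches the post is the hard part this does not touch:

```python
import re

# Tiny made-up stand-in for the "ten hundred most common words" list;
# a real instance would load the full list from a file.
ALLOWED_WORDS = {
    "i", "think", "we", "need", "a", "way", "to", "stop", "bots", "from",
    "posting", "here", "people", "should", "be", "the", "of", "and", "is",
}


def words_outside_list(summary: str, allowed=ALLOWED_WORDS) -> list:
    """Return the words in a summary that are not on the allowed list."""
    words = re.findall(r"[a-z']+", summary.lower())
    return [w for w in words if w not in allowed]


def summary_is_simple(summary: str) -> bool:
    """True when every word of the summary is on the allowed list."""
    return not words_outside_list(summary)
```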

A bot can do that and do it at scale.

I think we are going to need to reconceptualize the Internet and why we are on here at all.

It already is practically impossible to stop bots, and in a very short time it’ll be completely impossible.

I think I communicated part of this badly. My intent was to address “what is this speech?” classification, to make moderation scale better. I might have misunderstood you but I think you’re talking about a “who is speaking?” problem. That would be solved by something different.

It sounds like you’re describing Newspeak from 1984.

Simplifying language removes nuance. If you make moderation decisions based on the simple English vs. what the person is actually saying, then you’re policing the simple English more than the nuanced take.

I’ve got a knee-jerk reaction against simplifying language past the point of clarity, and especially automated tools trying to understand it.

I feel like it’s only a matter of time before most people just have AIs write their posts.

The rest of us with brains, who don’t post our status as if the entire world cares, will likely be here, or some place similar… Screaming into the wind.

What? I post a lot, but the majority?

…oh, you said LLM. I thought you said LMM.

Also is data scraping as much of an issue?

Data scraping is a logical consequence of being an open protocol, and as such I don’t think it’s worth investing much time in resisting it so long as it’s not impacting instance health. At least while the user experience and basic federation issues are still extant.

A decentralized authentication system that supports pseudonymous handles. The authentication system would have optional verification levels.

So I wouldn’t know who you are but I would know that you have verified against some form of id.

The next step would then be attributes: one of which is your real name, but also country of birth, race, gender, and other immutable attributes that can be used but not polled.

So I could post that I am Bob living in Arizona and I was born in Nepal, and those would be tagged as verified, but someone couldn’t reverse that and request those attributes if I want to post without revealing those bits of data.
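One known way to get “verified but not pollable” attributes is selective disclosure with salted hashes: the issuer vouches for a list of hashes, and the holder later reveals only the attributes they choose, each with its salt. A minimal sketch; the function names are hypothetical, and the issuer’s signature over the digest list is assumed rather than implemented:

```python
import hashlib
import secrets


def commit(name: str, value: str, salt: bytes) -> str:
    """Salted hash of one attribute; the salt stops guessing common values."""
    return hashlib.sha256(salt + f"{name}={value}".encode()).hexdigest()


def issue(attributes: dict) -> tuple:
    """Hypothetical issuer step: returns (public digests, private salts).

    A real system would also sign the digest set; that is assumed here."""
    salts = {name: secrets.token_bytes(16) for name in attributes}
    digests = {commit(n, v, salts[n]) for n, v in attributes.items()}
    return digests, salts


def disclose(attributes: dict, salts: dict, names: list) -> list:
    """Holder reveals only the chosen attributes, each with its salt."""
    return [(n, attributes[n], salts[n]) for n in names]


def check(digests: set, disclosed: list) -> bool:
    """Verifier: every revealed attribute must match a vouched-for digest."""
    return all(commit(n, v, s) in digests for n, v, s in disclosed)
```

Bob can show `residence` and `birth_country` as verified while his name stays an unopened hash that nobody can query.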

We need digital identities, like, yesterday.

Yeah I’m not seeing any way around that sadly. At least for places where you want/need to know the content is from an actual person.

Precisely, and it can stay pseudo-anonymous. A trusted third party (Governments? Banks? A YMCA gym membership?) issuing a hashed certificate or token is all that’s needed. You don’t need to know my name, age, gender: but if you could confirm that I DO have those attributes, and X, Y, and Z parties confirmed it, then it’s likely I’m a human.

I think it would make sense to channel all bots/propaganda into some concentrated channels. Something like https://lemmygrad.ml/u/yogthos where you can just block all propaganda by blocking one account.

There are simple tests to out LLMs, mostly things that will trip up the tokenizers or sampling algorithms (with character counting being the most famous example). I know people hate captchas, but it’s a small price to pay.

Also, while no one really wants to hear this, locally hosted “automod” LLMs could help seek out spam too. Or maybe even a Kobold Hoard type “swarm.”

Captchas don’t do shit and have actually been training for computer vision for probably over a decade at this point.

Also: Any “simple test” is fixed in the next version. It is similar to how people still insist “AI can’t do feet” (much like Rob Liefeld). That was fixed pretty quick; it is just that much of the freeware out there is using very outdated models.

I’m talking text only, and there are some fundamental limitations in the way current and near future LLMs handle certain questions. They don’t “see” characters in inputs, they see words which get tokenized to their own internal vocabulary, hence any questions along the lines of “How many Ms are in Lemmy” is challenging even for advanced, fine tuned models. It’s honestly way better than image captchas.

They can also be tripped up if you simulate a repetition loop. They will either give an incorrect answer to try and continue the loop, or, if their sampling is over-tuned, give incorrect answers avoiding instances where the loop is the correct answer.
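A minimal sketch of the letter-counting challenge described above. The word pool and function names are hypothetical; as the reply below points out, a system with access to the raw characters passes it trivially, so at best it’s a speed bump:

```python
import secrets

# Hypothetical word pool; words with repeated letters make better questions.
WORDS = ["lemmy", "federation", "instance", "moderator", "community"]


def make_challenge() -> tuple:
    """Build a letter-counting question and its expected answer."""
    word = secrets.choice(WORDS)
    letter = secrets.choice(sorted(set(word)))
    question = f'How many times does the letter "{letter}" appear in "{word}"?'
    return question, word.count(letter)


def check_answer(expected: int, reply: str) -> bool:
    """Accept a bare decimal number, tolerating surrounding whitespace."""
    reply = reply.strip()
    return reply.isdecimal() and int(reply) == expected
```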

They don’t “see” characters in inputs, they see words which get tokenized to their own internal vocabulary, hence any questions along the lines of “How many Ms are in Lemmy” is challenging even for advanced, fine tuned models.

And that is solved just by keeping a non-processed version of the query (or one passed through a different grammar to preserve character counts and typos). It is not a priority because there are no meaningful queries where that matters other than a “gotcha” but you can be sure that will be bolted on if it becomes a problem.

Again, anything this trivial is just a case of a poor training set or an easily bolted on “fix” for something that didn’t have any commercial value outside of getting past simple filters.

Sort of like how we saw captchas go from “type the third letter in the word ‘poop’” to nigh unreadable color blindness tests to just processing computer vision for “self driving” cars.

They can also be tripped up if you simulate a repetition loop.

If you make someone answer multiple questions just to shitpost they are going to go elsewhere. People are terrified of lemmy because there are different instances for crying out loud.

You are also giving people WAY more credit than they deserve.

Well, that’s kind of intuitively true in perpetuity

An effective gate for AI becomes a focus of optimisation

Any effective gate with a motivation to pass will become ineffective after a time, on some level it’s ultimately the classic “gotta be right every time Vs gotta be right once” dichotomy—certainty doesn’t exist.

@NuXCOM_90Percent @brucethemoose would some kind of proof of work help solve this? AFAIK it’s working on Tor.

Somehow I didn’t get pinged for this?

Anyway, proof of work scales horrendously, and spammers will always beat out legitimate users, if that even holds. I think Tor is a different situation, where the financial incentives are aligned differently.

But this is not my area of expertise.
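For reference, a Hashcash-style proof of work looks roughly like this (a generic sketch, not Tor’s actual scheme). It shows why it scales badly: finding a nonce costs about `2**difficulty` hashes for everyone, while checking costs one, so a spammer with more hardware simply pays the same toll faster:

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def solve(challenge: str, difficulty: int) -> int:
    """Find a nonce whose SHA-256 has `difficulty` leading zero bits.

    Expected work is about 2**difficulty hashes, for a spammer and an
    honest user alike."""
    for nonce in count():
        digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Checking a solution costs a single hash."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty
```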

Reputation systems. There is tech that solves this but Lemmy won’t like it (blockchain)

You don’t need blockchain for reputation systems, lol. Stuff like Gnutella and PGP web-of-trust have been around forever. Admittedly, the blockchain can add barriers for some attacks, mainly Sybil attacks, but a friend-of-a-friend/WoT network structure can mitigate that somewhat too.

Slashdot had this 20 years ago. So you’re right, this is not new or in need of some new technology.

The space is much more developed now. It would need ever-improving dynamic proof-of-personhood tests.

I think a web-of-trust-like network could still work pretty well where everyone keeps their own view of the network and their own view of reputation scores. I.e. don’t friend people you don’t know; unfriend people who you think are bots, or people who friend bots, or just people you don’t like. Just looked it up, and Wikipedia calls these kinds of mitigation techniques “Social Trust Graphs” https://en.wikipedia.org/wiki/Sybil_attack#Social_trust_graphs . RetroShare kinda uses this model (but I think reputation is just a hard binary, and not reputation scores).

I don’t see how that really stops bots. We’re post-Turing test. In fact, they could even scan previous reputation point allocations there and devise a winning strategy pretty easily.

I mean, “don’t friend, or put high trust in, people you don’t know” is pretty strong. Due to the “six degrees of separation” phenomenon, it scales pretty easily as well. If you have stupid friends who friend bots, you can cut them all off, or just lower your trust in them.

“Post-turing” is pretty strong. People who’ve spent much time interacting with LLMs can easily spot them. For whatever reason, they all seem to have similar styles of writing.
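A minimal sketch of the “everyone keeps their own view” trust graph: trust decays with each hop away from you, and unfriending someone who vouches for bots drops that whole branch from your view (unless it is also reachable through someone else). The `decay` and `max_hops` values are arbitrary assumptions:

```python
from collections import deque


def trust_scores(graph: dict, me: str, decay: float = 0.5, max_hops: int = 3) -> dict:
    """Personal trust view: direct friends get `decay`, friends-of-friends
    `decay**2`, and so on (shortest path wins), stopping after `max_hops`.
    Each user runs this on their own graph, so there is no global score."""
    scores = {}
    frontier = deque([(me, 0)])
    seen = {me}
    while frontier:
        user, hops = frontier.popleft()
        if hops == max_hops:
            continue
        for friend in graph.get(user, ()):
            if friend not in seen:
                seen.add(friend)
                scores[friend] = decay ** (hops + 1)
                frontier.append((friend, hops + 1))
    return scores


def unfriend(graph: dict, me: str, other: str) -> None:
    """Cutting off a friend who vouches for bots prunes their branch."""
    graph[me] = [f for f in graph.get(me, []) if f != other]
```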

I mean, “don’t friend, or put high trust in, people you don’t know” is pretty strong. Due to the “six degrees of separation” phenomenon, it scales pretty easily as well. If you have stupid friends who friend bots, you can cut them all off, or just lower your trust in them.

Know IRL? Seems it would inherently limit discoverability and openness. New users or those outside the immediate social graph would face significant barriers to entry, and it would still be vulnerable to manipulation, such as bots infiltrating through unsuspecting friends or malicious actors leveraging connections to gain credibility.

“Post-turing” is pretty strong. People who’ve spent much time interacting with LLMs can easily spot them. For whatever reason, they all seem to have similar styles of writing.

Not the good ones; many conversations online are fleeting. Those tell-tale signs can be removed with the right prompt and context. We’re post-Turing in the sense that in most interactions online people wouldn’t be able to tell they were speaking to a bot, especially if they weren’t looking, which most aren’t.

Do you have a proof of concept that works?

Are they just putting everything on layer 1, and committing to low fees? If so, then it won’t remain decentralized once the blocks are so big that only businesses can download them.

It has adjustable block size and computational cost limits through miner voting, NiPoPoWs enable efficient light clients. Storage Rent cleans up old boxes every four years. Pruned (full) node using a UTXO Set Snapshot is already possible.

Plus you don’t need to bloat the L1; it can be done off-chain and authenticated on-chain using highly efficient authenticated data structures.
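The classic authenticated data structure is a Merkle tree: only the root hash needs to go on-chain, and anyone can prove an off-chain record is included using a short path of sibling hashes. The chain mentioned above isn’t named here and may use a different structure, so treat this as a generic textbook sketch:

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(leaves: list) -> list:
    """Build every level of a Merkle tree, leaves first.
    An odd node at the end of a level is paired with itself."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def proof(levels: list, index: int) -> list:
    """Sibling hashes from leaf to root; the bool means 'sibling is on the right'."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sib_hash = level[sibling] if sibling < len(level) else level[index]
        path.append((sib_hash, sibling > index))
        index //= 2
    return path


def verify(leaf: bytes, path: list, root: bytes) -> bool:
    """Recompute the root from one leaf and its path; only the root is trusted."""
    node = h(leaf)
    for sib_hash, sib_is_right in path:
        node = h(node + sib_hash) if sib_is_right else h(sib_hash + node)
    return node == root
```

The proof grows with the logarithm of the number of records, which is what keeps on-chain verification cheap.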

We also need a solution to fucking despot mods and admins deleting comments and posts left-and-right because it doesn’t align with their personal views.

I’ve seen it happen to me personally across multiple Lemmy domains (I’m a moron and don’t care much to have empathy in my writing, and it sets these limp-wrist morbidly obese mods/admins to delete my shit and ban me), and it happens to many people as well.

Don’t go blaming your inability to have empathy on ADHD. That is in absolutely no way connected. You’re just a rude person.

I’m also rude in real life too! 😄

So much irony in this one

Good job chief 🤡

Freedom of expression does not mean freedom from consequences. As someone who loves to engage in trolling for a laugh online, I can tell you that if you get banned for being an asshole you deserve it. I know I have.

- Dude says he is regarded BC reasons in a civil manner

- Another dude proceeds to aggressively insult him… I would say not civil.

Who is the asshole here?

limp-wrist morbidly obese

That tells me all I need to know

Yes

I do indeed fuck myself, every day, thanks.

You have that tool, it’s called finding or hosting your own instance.

lemm.ee and lemmy.dbzer0.com both seem like very level-headed instances. You can say stuff even if the admins disagree with it, and it’s not a crisis.

Some of the other big ones seem otherwise, yes.

Lemm.ee hasn’t booted me yet? Much like OP, I’m not the most empathetic person, and if I’m annoyed then what little filter that I have disappears.

Shockingly, I might offend folks sometimes!

Just create your own comm.

Communities should be self moderated. Once we have that we can really push things forward.

Self-moderated is just fine. Why do I need to doxx myself to be online? I’m not giving away my birth certificate or SSN just to post on social media. That idea is crazy lmao.

My own “we need” list, from a dork who stood up a web server nearly 25 years ago to host weeb crap for friends on IRC:

We need a baseline security architecture recipe people can follow, to cover the huge gap in needs between “I’m running one thing for the general public and I hope it doesn’t get hacked” and “I’m running a hundred things in different VMs and containers and I don’t want to lose everything when just one of them gets hacked.”

(I’m slowly building something like this for mspencer.net but it’s difficult. I’ll happily share what I learn for others to copy, since I have no proprietary interest in it, but I kinda suck at this and someone else succeeding first is far more likely)

We need innovative ways to represent the various ideas, contributions, debates, informative replies, and everything else we share, beyond just free-form text with an image. Private communities get drowned in spam and “brain resource exhaustion attacks” without it. Decompose the task of moderation into pieces that can be divided up and audited, where right now they’re all very top-down.

Distributed identity management (original 90s PGP web of trust type stuff) can allow moderating users without mass-judging entire instances or network services. Users have keys and sign stuff, and those cryptographic signatures can be used to prove “you said you would honor rule X, but you broke that rule here, as attested to by these signing users.” So people or communities that care about rule X know to maybe not trust that user to follow that rule.
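A minimal sketch of the “attested to by these signing users” step: each reader or community decides from its own trusted-signer list whether enough distinct signers agree that a user broke a rule. Signature verification (e.g. with PGP) is assumed to happen earlier; `Attestation`, `breaks_rule`, and the threshold of 2 are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    """A claim that `subject` broke `rule` in `post_id`, signed by `signer`.
    Only attestations whose signatures already verified should get here."""
    signer: str
    subject: str
    rule: str
    post_id: str


def breaks_rule(attestations, subject: str, rule: str, trusted: set, threshold: int = 2) -> bool:
    """Judge from *my* trusted signers only; each signer counts once,
    no matter how many posts they flag."""
    signers = {
        a.signer
        for a in attestations
        if a.subject == subject and a.rule == rule and a.signer in trusted
    }
    return len(signers) >= threshold
```

Two communities with different trust lists can reach different verdicts on the same user, which is the point: no instance-wide judgment is needed.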

I think the key is building a social information system based on connections we have in real life. Key exchange parties, etc.

It’s the only way to introduce a prohibitively high cost to centralized broadcast and reduce the power of these mega-entities

Could you clarify? A sneakernet? Peer to peer?

I think the good news is, regardless of what gets done, people are hungry for real connections and the old internet.

Peer to peer.

I’ve spent a bit of time developing some related ideas, but haven’t had time to start building it.

It’s a bit rough still, but I’d love some feedback! https://freetheinter.net/

honestly, i’ll donate money to whoever can design this and make it scalable.

Plus we can have AI read a post history for us and either make a reputational decision, or highlight in the interface how reputable or disreputable the user is. You could have it collapse but not delete a user’s comment, and you could also lower and raise the bar of acceptability at any time. We need better tools than a polished BBS descendant.
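A minimal sketch of “collapse but don’t delete, with an adjustable bar”. How the reputation scores are produced (an AI reading post history, votes, a trust graph) is left open; here they are simply passed in as a hypothetical dict:

```python
from dataclasses import dataclass


@dataclass
class Comment:
    author: str
    text: str


def render(comments, reputation: dict, threshold: float) -> list:
    """Collapse (never delete) comments whose author scores below the
    reader's threshold. Unknown authors default to a score of 0.0."""
    lines = []
    for c in comments:
        score = reputation.get(c.author, 0.0)
        if score < threshold:
            lines.append(f"[collapsed: {c.author}, score {score:.2f}]")
        else:
            lines.append(f"{c.author}: {c.text}")
    return lines
```

Because the threshold is a per-reader setting, lowering it to zero restores the full, unfiltered thread.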

check out https://sandstorm.org/ , the project is pretty dead but they had the right idea

Guillotines are another option.

More will just spawn and take their place.

More heads require more guillotines.

Can we not design guillotines that cut multiple heads at once, thus reducing the head to guillotine ratio?

You’re onto something here.

I guess we could stack the rich on top of each other. That way we wouldn’t even have to modify the guillotine. We’d just have to make sure the blade is extra sharp.

Make the design 4D, and stack them in multiple dimensions, maybe one 4D guillotine is even sufficient?

And for testing purposes, we could try them on the designers!

What a blast!

What really matters is the back-to-nose distance: this gives you the head-per-chop ratio but also drives the max-head-per-chop value, which itself depends on the blade weight and max blade height, which is limited by the ceiling height if inside, or the max free-standing height of the pillars if outside.

This guy guillotines.

Are we fomenting revolution or creating a new compression algorithm?

Yes, we’re trying to quantize multiple CEOs into a single guillotine operation

Based

The heads yearn for the guillotines

But what about places where heads won’t roll? They deserve a space to be able to access.

actually, if we could remove the sociopaths from power, it would allow academics to take over. it’s not that hard to engineer a society where people aren’t like they are now. we’re learned-behavior creatures. it’s possible to unlearn what we know now and teach our children to never be this way again.

Hey, that’s us!

If social media becomes decentralized we might even gain traction reversing some of the brainwashing on the masses. The current giants are just propaganda machines. Always have been, but it’s now blatant and obvious. They don’t even care to hide it.

Guns are the only alternative to the tech oligarchy.

You think they can’t buy, manipulate, or just crush decentralized social media? If anything they can do it easily, divide and conquer. FOSS ain’t gonna free you, esp. when the largest contributors to FOSS projects are big corps.

That’s absurd. Large sharp dropped blades, poison, starvation, spears, looped ropes, fire… There are many alternatives available.

We could make a wiki filled with all the options.

But let’s prioritize the non-violent ones first.

We did prioritize non-violent ones, and this is where it got us. The ONLY option is violence.

I’m just talking about how we design the wiki. Gotta be tasteful and present ourselves in the best light.

That’s fair, it’s important in some ways to conceal the hand a bit. We have to make the rich as uncomfortable as we are, though.

Oh, absolutely. With quicklinks to any old category the user may want to get to fast.

so we just all buy guns and fend for ourselves? we need communities in order to fight fascism, we need to be able to organize and share valuable information with people. is technology the answer to the problem? no, it’s not, but it is part of the answer, and to ignore that is shortsighted.

As to answers beyond simply getting-armed-and-fostering-healthy-gun-culture-and-education-among-us:

“Practicing mutual aid is the surest means for giving each other and to all the greatest safety, the best guarantee of existence and progress, bodily, intellectually and morally.”

That’s Kropotkin

And then Modern Libs even observe, more verbosely:

“The structures of our state economies are going to matter in terms of protecting democracies, and by that I mean if you look at economies that were based in the kind of small producer economies like New England was vs states like the South and the American West that were always built on the idea of very high capital using extractive methods to get resources out of the land either cotton or mining or oil or water or agri business, those economies always depend on a few people with a lot of money, and then a whole bunch of people who are poor and doing the work for those Rich guys – and that I’m not sure is compatible in terms of governance without addressing the reality that you know if people have more of a foothold in their own communities, they are then more likely to support the kinds of legislation that Community [Education, Healthcare, …] and that may be the future of democracy, if not a national democracy”

Heather Cox Richardson, professor of American history On The Weekly Show with Jon Stewart on Trump’s Win and What’s Next https://youtu.be/D7cKOaBdFWo?t=2139 (time-stamped)

If a Conservative wants me dead, they’re going to have to work and sweat for it. I’m not doing the heavy lifting for them (A Quote I agree with)

Our resulting interactions may seem chaotic and illegible to authority, but it is through that seeming chaos that vastly complex, horizontal, and resilient practices of learning, cooperation, and reciprocity have historically arisen.

By Andrewism https://youtu.be/qkN_nQPpeSU

MASKING REALLY HELPS; Covid, RSV, Flu is a greater threat to marginalized communities. Can’t do organizing without prioritizing precautions.

Show up for your neighbors. The rest will come.

No, we buy guns AND we organize our coalitions, just like the Black Panthers did.

We can’t intellectualize our way out of Proud Boys lynchings anymore, guys.

(Also all the stuff VerticaGG said)

2a is there in case 1a don’t work

Republican solutions to republican problems eh?

Guns work better when you can coordinate. Resistance movements need to be coordinated. Running out with a gun like a madman isn’t going to work.

The only solution guns provide are dead people. You have fallen for the pathetic lie of the right.

Oh. Guns are even better for that.

On the right? They are a lightning rod for criticism and complaints. “All the jobs in our state were taken away and my daughter is dying of an easily curable disease. BUT THOSE FUCKING LIBERALS ARE TRYING TO TAKE AWAY MY SECOND AMENDMENT RIGHTS!!!”

On the left? They are a way to “meet in the middle” on a lot of legislation while also being a great way to vilify and target groups. For example, anyone with even a passing understanding of history knows that the Civil Rights Movement was not MLK Jr giving one speech and fist bumping Rosa Parks on the bus. The threat of violence was definitely a factor (beyond that it gets murkier). And people LOVE to argue that Blacks picking up guns is how that was “won”.

You know what else came of that? “That kid is a gangbanger and has a gun. SHOOT HIM. Oh shit, uhm. Fuck it, we’ll just say the toy train looked like a gun”.

And we’ll see that continue. LGBTQ folk will decide they need a gun and you can bet the cops and the chuds will be glad to open fire at protestors because “THEY HAVE A GUN!!!”

And the absolute best part? “Both sides” are fucking delusional if they think their guns are going to accomplish anything against an oppressive government. Cops won’t go near a pistol if a kid’s life is on the line. But they’ll open fire like Mel Gibson if they think a business is in trouble. Let alone the military, with tanks and drones, and there will be a lot more “combat footage” to watch online.

If there was ANY chance that The 2nd Amendment could pose ANY threat to a tyrannical government, it would have been destroyed decades ago.

If there was ANY chance that The 2nd Amendment could pose ANY threat to a tyrannical government, it would have been destroyed decades ago.

Somebody almost killed Trump in July. A couple of inches was the difference between a Republican party in chaos just before the election and a party united behind their fascist hamberdler. The way this is going the 2A is going to be your only real defense against modern Nazism so you’d be better off hitting the range and getting proficient with a firearm than you are posting pics with #resist on Instagram.

In many ways, trump’s campaign was bolstered by the image of him standing “defiant” with a fist raised in the air and someone else’s blood all over him.

If trump HAD gotten got? Evil deep state assassination attempt by biden and here is your new candidate that the entire party would rally behind. And democrats would be even more reluctant to say or do anything out of “decorum”.

Because here is the thing: trump isn’t even the problem. He is an evil bastard but he is a symptom of the problem. Project 2025 is what those rapid fire EOs come from. And Project 2025 very much benefits from right wing fascists controlling basically all of social media.

And I will just, once again, ask: What do you think your guns are going to do against a military that is cracking down on you and your buddies as “terrorists”? Because if there was ANY chance of a civilian force posing ANY threat to a government, we would have banned guns back in the late 1700s.

You’re making a lot of unfounded assumptions about what would have happened if Trump were assassinated. No one else has been able to harness MAGA energy the way he has. It’s entirely possible the movement would splinter without its figurehead. We won’t know that until he’s gone. Although it seems less likely now that he presumably has 4 years to enact policy changes and put people in place to keep his agenda moving after his term is up.

There’s plenty of debate to be had on the topic of the effectiveness of guns in civil resistance, all of which can be found in more detail elsewhere than we’re going to be able to cover here. However, suffice it to say that your understanding of resistance in general and guerilla tactics specifically is severely lacking if you’re assuming that this situation would play out as an open confrontation between the US military and some sort of militia. Even though such a conflict would provide more room for maneuvering than you are giving it credit for, that would not be the preferred method of engagement. Generals and other senior officers have to buy groceries and go to the DMV just like everyone else. You pick your targets when and where you can get them. More than anything else, it’s important to acknowledge that in the situation where it becomes necessary to think about these kinds of things in more detail, my guns afford me many more options than your knives (or whatever else you prefer to rely on) would. Unless, of course, you plan on giving up without a fight, in which case we clearly have such different outlooks that additional discussion will not help us find common ground.

Yeah…

Your mass assassinations plan doesn’t work when there is a camera on every corner and traffic light. L Dog was always going to get caught if he hadn’t fled the country within hours of blapping that exec. You are also apparently assuming that everyone in your fantasy is Jason Bourne, part of a highly trained guerilla fighting force that can blend in and out of anything.

You pick your targets when and where you can get them.

Yeah. The difference between being the chosen one in a young adult novel and actually accomplishing anything of value is what taking out your “target” accomplishes.

And… a great example of that is Palestine. For the sake of simplicity, let’s call what Hamas did “attacking a target”. What was the outcome of that? Israel had “justification” to engage in mass ethnic cleansing for over a year.

Unless, of course, you plan on giving up without a fight, in which case we clearly have such different outlooks that additional discussion will not help us find common ground.

I believe in fighting for change in ways that can actually protect others and accomplish things. Rather than fantasizing about living in a Call of Duty commercial and just painting an even bigger target on the backs of the groups I claim to be helping.

If you or the other “Buy a gun, it is the only thing you can do. I hear Fred’s on 4th street have great deals on assault rifles!” folk had ACTUALLY engaged in any activism whether peaceful or otherwise you would have long since had it explained to you: YOU DO NOT BRING A FUCKING GUN TO A PROTEST. Because the moment the other side sees it? They open fire. Because cops will give a bottle of water to the white kid with an assault rifle looking for some n*****s to kill. They’ll fucking murder anyone who looks even slightly brown if they have a bulge in their jacket pocket.

And… a great example of that is Palestine. For the sake of simplicity, let’s call what Hamas did “attacking a target”. What was the outcome of that? Israel had “justification” to engage in mass ethnic cleansing for over a year.

You put justification in quotes here, and I think you clearly understand why. Netanyahu propped up Hamas as the de facto government specifically to ensure that a more militant party would give Israel the necessary “justification” to attack the people there. So even their governance, and that attack itself, is traceable to Israel’s state violence. A minor note, but an important one, I think. And I think it’s one which requires more thought than just pointing to that and saying “See, I told you, violence doesn’t work, and is bad, and Israel wants it!”, because Israel is obviously not an especially rational or functional state, either for its people or for its goals.

More broadly, though, it’s not necessary at all for people to have guns in order for cops to kill them. Cops can invent any number of reasons to kill someone in their day-to-day. The gun is something you just see in the news media a lot because it’s incredibly common in America, and especially common in the hoods where cops go out and kill people in larger numbers. Again, we can see that as an extension of a context, created by the state, which has naturally produced violence. Partially that comes through valuable, and illegal, property, mostly in the form of drugs, which must be protected through extralegal means, i.e. cartels and gangs, but it also arises naturally from police violence in those places, which the ruling class intentionally creates. It’s a way to create CIA black budgets, it’s a way to incarcerate and vilify your political opponents at higher rates, etc. You can’t be intolerant of the idea of guns as a blanket case in that context, because it’s a totally different kind of context, and one which is created by the state.

I would maybe also make the point that a protest is incentive enough against killing people, because it would be widely known and televised as a massacre in the media. You know, just gunning people down in the street, en masse. That line is sort of, becoming less clear over time, as the government seems to be more and more willing to condone that, if not outright do that, but I don’t really think that if, say, everyone in the BLM riots was armed, the cops would just start randomly firing into the crowd. They’d be hopelessly outnumbered, for one, so that’s a pretty clear reason for the police not to just start sputtering off rounds like a bunch of idiots, but you’d also probably see a protracted national guard response over the course of the next several weeks, which nobody really wants to deal with, both in terms of the media response and just the basic type of shit that would happen.

You also have several extrapolations you can make from just that happening in the first place, even though it never would. Like, the kind of city which could get up to that, in america, would maybe reveal something incredibly uncomfortable to the ruling institutions about that particular city and its political disposition and potentially that could be extrapolated to the entire country. Most places don’t get to that point because they reach civil war before that, which is kind of more along the lines of what the preceding commenter is talking about. More along the lines of, say, IRA tactics.

Which is all to say, that this is something which is shaped entirely by the government’s intentional responses and the contexts that they create. When they decide to escalate, that should be seen, naturally, as being on them, and not on your average person. I think what the previous commenter is trying to say, with a good faith reading, is that we are probably due, in the next 4 years and perhaps beyond, for an escalation. I don’t think that’s really a morally great thing, or a good context, but I do think they’re potentially right based on how things shake out, and I think that people should probably come to terms with that even as we try to avoid it.

Edit: Also, I forgot to note this, but this isn’t really a disagreement in core ideals, just in tactics. Dual power isn’t so much a deliberate choice of tactic as it is a certainty, given that both sides of this debate are mutually beneficial to one another. If you have, or can place, a more reasonable politician in office, either through violence (highly unusual, but it does happen occasionally if the dice reroll lands well enough) or through the political system itself, then that reasonable politician is just that: more reasonable, i.e. more likely to accomplish goals which are desirable to any violent guerillas. Likewise, the pressure that violent guerillas exert can be seen as a kind of abstract economic cost constantly being leveraged against unreasonable political powers, in favor of reasonable elements of that political system.

The main point against this is that the United States is currently so unreasonable, politically, that it’s functionally impossible to bargain with in any real way. Any violence under such a political system, one which refuses any attempt at change, is seen as ultimately meaningless. But I think that’s maybe also part of a broader point about how people just generally feel, understandably, incredibly pessimistic about the future, and are sort of retreating back into a kind of survival mode. Especially, I think, because they’ve been made to feel totally responsible for the weight of the world, when ultimately the decision of the political power to retaliate and do mass violence is, as previously stated, both inevitable and entirely their own decision, one they must be held responsible for, rather than the people.

And we’ll see that continue. LGBTQ folk will decide they need a gun and you can bet the cops and the chuds will be glad to open fire at protestors because “THEY HAVE A GUN!!!”

Exactly, the presence of a weapon just gives them a reason to pull the “THEY’RE COMIN RIGHT FOR US” bullshit from South Park Season Fucking One.

It might be the only path forward.

I mean humanity survived thousands of years without any social media at all…

Gonna disagree here.

Humans have always had “social media”, but it’s not been directed by a cadre of oligarchs until recently.

I mean shit, humans have been sitting around the campfire telling stories to each other going all the fucking way back to forever. Sure, a campfire story isn’t a tweet, but for our monkey brains it’s essentially the same thing: how we interact with our social groups and learn what’s going on around us.

The problem is that the campfire stories couldn’t be manipulated into making your cavemen neighbors hate the other half, because half of them were totally pro rabbit fur while you’re pro squirrel fur.

You absolutely can do that and worse now, so while we’ve always had social media, we simply never had anyone with enough control to make an entire society eat each other because of its influence.

You certainly could tell cavemen stories to manipulate them, back then.

The difference was you could only reach one campfire at a time. Nowadays the whole Internet is one campfire, metaphorically.

Lol chimpanzees kill each other in literal wars with torture, kidnapping, extortion, terrorism and more, and you think a caveman never thought of lying about the enemy group?

The previous post didn’t talk about inter-campfire relations. It talked about relations between people in one campfire. Relations with outsiders have always been fucky. It’s a miracle how the EU even came to be in the first place with how different everything/everyone is.

There’s a big difference between sitting around a fire telling stories. And sending pseudonymous click-baity messages (I’m slightly exaggerating) across the globe.

As it’s not guaranteed anymore: have you sat around a fire with friends? IME it’s so much more fulfilling and less prone to hate. Healthier (apart from the smoke). There’s so much more to communication than text messages.

There’s a big difference between sitting around a fire telling stories. And sending pseudonymous click-baity messages (I’m slightly exaggerating) across the globe.

Totally agree, except that regardless of how smart a person is…all our brains are pretty dumb and easy to fool. If reading stupid click-bait messages on the internet triggers the same connections as having a talk around the fire, then to our brains it’s literally the same. And it has all the same things, just more so. Is someone more likely to lie to you for their own ends on the internet? Probably, but your best friend would lie to your face if their mental maths figured that lying would benefit them more than telling the truth. Not saying that society is doomed because we’re all inherently selfish and don’t care about the welfare of anyone past ourselves. But to say that social media doesn’t fill the same function as village gatherings, the town crier exclaiming news where you might not get word, or gathering around the fire with Oogtug and Feffaguh to tell each other about your day…in the current era, when people are more socially isolated than ever? Nah. Doesn’t track for me.

all our brains are pretty dumb and easy to fool.

Absolutely, but I think that when we’re talking to actually smart people in person, we are at least subconsciously more likely to believe the person who actually has something to say (i.e. really knows something we don’t). With social media a lot of these communication cues are missing, so if the text sounds smart, we may believe it. Sure, you can fake and lie, etc., but I think (going back in time) we have a good instinct for people who may help us in some way, i.e. through knowing where to find food or secure shelter, etc.: stuff that helps our survival, which in the end for humans is basically good factual knowledge that helps the survival of the species as a whole.

Today our attention spans are reduced to basically nothing to a large part because of social media promoting emotional (unfortunately mostly negative/anxiety/anger) short messages (and ads of course) that reinforce whatever we believe which likely strengthens bad connections in the brain.

Also the sheer mass of information is very likely not good for us. I.e. mostly nonfactual information, because well, there’s way more people that “have heard about something” than actually researched and gone down to the ground to get the truth (or at least a good model of it).

This all mixed, well doesn’t give me a positive outlook unfortunately…

I keep putting off replying to this, because it deserves a good, well thought reply. I’ve not got the mental space for it.

Suffice to say, I think what you said tracks with what I was stabbing at. And I agree. I’ll keep this as unread and maybe come back over the weekend if I can get my thoughts together.

There was not an 8-billion-person supply chain back then.

Yeah, which actually underlines my point even more. We weren’t “designed” for connecting with everyone around the world. Evolutionarily, we lived in smaller groups that sometimes had contact with other groups.

Today we can just connect with our bubbles (like here on Lemmy) and get validated and reinforce our beliefs regardless of whether they are right or wrong (mostly factually). As we can see, this doesn’t seem to be healthy for most people. In smaller circles (like the scientific community) this helps, but in general… Well, I don’t think I have to explain the current situation in the world (and especially in the USA)…

This is the better path forward… That everyone just gets so sick of it that they drop it - I’ve actually seen a lot of that among my own friends over the last week (and we aren’t from America even). But the right wingers will never drop it because it’s their community and echo chamber, and that’s where the further dangers to democracy come into play when they’re all in the sandbox together without parents…

Let’s call it by its name: neofeudalism/technofeudalism


In the same way that email has been decentralized from the get go, social media could have been equally decentralized, and I don’t mean in the older php forums, but in a different way that would allow people to reconnect with others and maintain contacts.

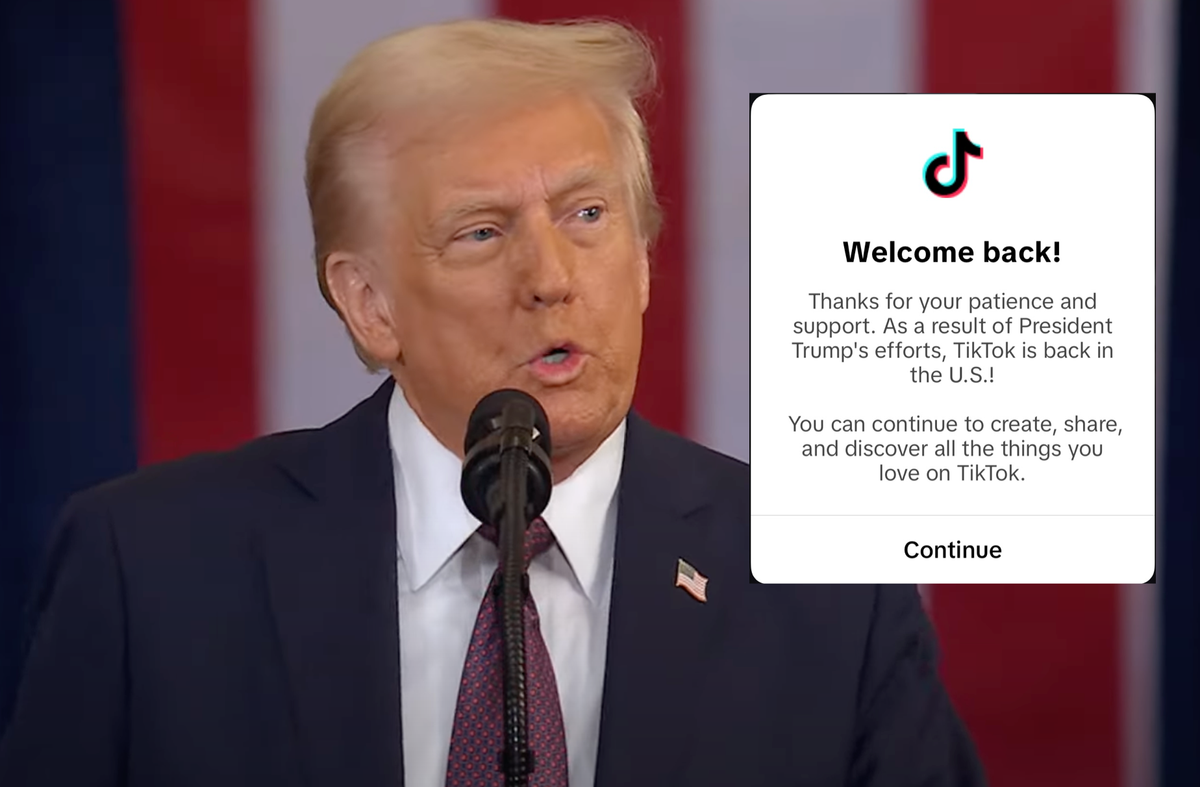

I have a feeling this place and other decentralized social media will be banned in the near future. Look at what’s happening to TikTok. You either bend the knee or you get axed. It’s why the other social media giants bent the knee. They understand the writing on the wall. There’s more going on behind the scenes that they don’t share with us. I think we’re sort of watching a quiet coup.

1000% agree. There is no freedom but the freedom that we build together.

if 100% is completely agreeing, what’s 1000%?

completely agreeing, 10 times

Preaching to the choir!

It might be good to reiterate (in part) why we’re all in here.
