Technically they still are, but since you have no control over the seed, practically they are not.



What happened to my computers being reliable, predictable, idempotent? :'(

No idea what Krisp is, but audio is flawless in my case. Granted, I’ve only really used it on X11 so far. I use the Vesktop Flatpak on Debian sid.

Give Vesktop a try. It’s a custom Discord client, and they say it supports Wayland screen sharing just fine. It works fine for me, but I haven’t tried the Wayland streaming part yet.

> Ubuntu is a fork of unstable Debian packages

And where exactly do you think Debian stable packages come from?

It’s basically the exact same thing. In both cases:

- At some point, freeze what came from unstable (through testing in Debian’s case, as a snapshot of unstable in Ubuntu’s case),

- fix bugs found in the frozen set of packages,

- release as stable.

2·7 months ago

Not if the root account is disabled, which is the default on Ubuntu (and on Debian if you leave the root password empty during install). You’d need `sudo su -`, but well… no sudo left, you know.

2·7 months ago

> I tried to convert Debian to Ubuntu by replacing the Debian repos in apt with Ubuntu’s and following with `dist-upgrade`

Shouldn’t it work, though? Or at least come close to working with the appropriate options passed down to dpkg?

6·7 months ago

It doesn’t work with root disabled.

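The way to fix this is to boot into a bash recovery shell (for example by adding `init=/bin/bash` to the kernel command line), where you land in a root shell without systemd. Remount `/` read-write first (`mount -o remount,rw /`), since it is usually still mounted read-only at that point. From there, you can hopefully `apt install sudo` if the .deb file is still in the cache. If not, you have to get networking working without systemd for `apt install` to work. Or you can put the sudo .deb file and all its missing dependencies on a USB stick and `apt install` them from the filesystem. Or just enable root, give it a password and reboot, so you can `su -` and `apt install sudo`.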
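A hypothetical dry-run helper that only prints which of the routes above applies, so nothing is changed while you figure it out. It assumes Debian’s default apt cache in `/var/cache/apt/archives` and a USB stick mounted at `/mnt/usb`; the mount point and the function name `plan_sudo_recovery` are made up for illustration, and the "get the network up" route is not covered:

```shell
#!/usr/bin/env bash
# Hypothetical dry run: prints the next recovery step, executes nothing.
# Assumptions: Debian's default apt cache path, USB stick mounted at /mnt/usb.

plan_sudo_recovery() {
  local cache=/var/cache/apt/archives
  local usb=/mnt/usb

  # compgen -G succeeds only if the glob matches at least one existing file.
  if compgen -G "$cache/sudo_*.deb" > /dev/null; then
    echo "apt install sudo   # sudo's .deb is still cached, no network needed"
  elif compgen -G "$usb/*.deb" > /dev/null; then
    echo "apt install $usb/*.deb   # sudo and its dependencies from the stick"
  else
    echo "passwd root   # then reboot, log in with su - and run: apt install sudo"
  fi
}

plan_sudo_recovery
```

Printing instead of running keeps the sketch harmless; once the suggested command looks right, you type it yourself.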

12·7 months ago

- `apt` something that ended up removing `sudo`. No more admin rights.

- Used `rsync` to back up pretty much everything in `/`, with the remove-source option (`--remove-source-files`)…

- `find` with the `-delete` option mispositioned. It deleted everything before the pattern was even checked.

- `chown`/`chmod` on `/bin` and/or `/usr/bin`.

- Removed everything in `/etc`.
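The `find` mistake is easy to reproduce safely: `find` evaluates its expression left to right, so a `-delete` placed before `-name` deletes every entry it visits, and the pattern is only checked afterwards. A minimal sketch in a throwaway directory (the file names are made up for the demo):

```shell
#!/usr/bin/env bash
set -eu

# Throwaway sandbox: never try the wrong variant on real data.
demo=$(mktemp -d)
touch "$demo/keep.txt" "$demo/junk.tmp"

# Wrong order: -delete runs on every entry first, and -name '*.tmp'
# is only evaluated afterwards, when it no longer matters.
find "$demo" -mindepth 1 -delete -name '*.tmp'
wrong_left=$(ls -A "$demo")

# Right order: filter first, then delete only what matched.
touch "$demo/keep.txt" "$demo/junk.tmp"
find "$demo" -mindepth 1 -name '*.tmp' -delete
right_left=$(ls -A "$demo")

echo "left after wrong order: [$wrong_left]"   # [] : keep.txt is gone too
echo "left after right order: [$right_left]"   # [keep.txt]
rm -rf "$demo"
```

`-mindepth 1` keeps the sandbox directory itself out of the match, so only its contents are affected.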

Also, I’ve read that C# is C++++ (as in: arrange those four + signs in a 2x2 grid, which in turn resembles a #).

13·9 months ago

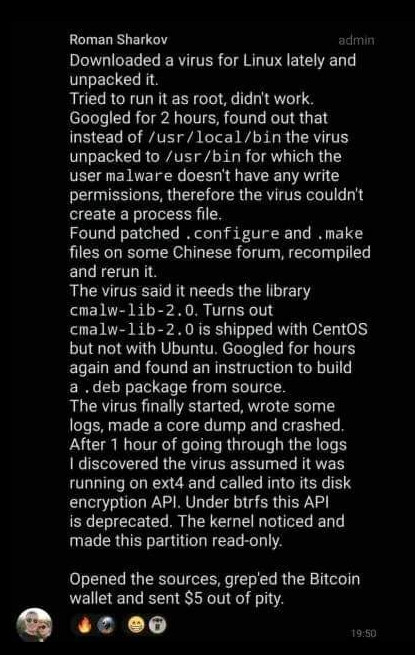

Here is why :)

7·9 months ago

Except that games are broken at release and need a day-one patch to work. Even if you get a Blu-ray shipped to you, the day the update servers are taken down, your physical copy won’t let you play the game either.

The only question I have is: is torrenting game patches/updates considered piracy as well? If it is, we are definitely doomed.

4·10 months ago

Isn’t that kind of what the AUR is, and exactly what people love about Arch?

Snap forces updates, and you cannot disable them. So if you use snaps, I guess you can stop worrying and keep going with your usual apt routine.

If you don’t provide a root password during install, the default user is added to the sudoers (the `sudo` group).

You’re taking my words far more literally than I intended :)

It was more along these lines: as a computer user, up until now I could (more or less) expect the tools (software/websites) I use to behave in a relatively consistent manner (even when it came to reproducing a crash by following the same steps). Doing the same thing twice would (mostly) get me the same result or behaviour. For instance, an Excel feature applied to a given dataset should behave the same way the next time I show it to a friend. Or, if I found a result on Google by typing a given query, I could hopefully find that website again easily enough with the same query (even though it might have moved up or down a little in the ranking).

It’s not strictly “reliable, predictable, idempotent”, but consistent enough that people (users) will say it is.

But with those tools (i.e. ChatGPT), you get an answer, but you can’t get that initial answer back with the same initial query: it’s basically impossible to get the same* output, because you have no control over the seed.

The random generator comparison is a bit of a stretch: there, you expect the output to be different; it’s by design. As a user, you expect the LLM to give you the correct answer, but it’s actually never the same* answer.

*By “same” I mean “it might be worded differently, but the meaning is close to the previous answer”. Just like when you ask someone the same question twice: they won’t use the exact same wording, but they will essentially say the same thing. That’s something those tools (or rather “end-user services”) don’t give me, and it’s what I wanted to point out in far fewer words :)
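A toy illustration of the seed point, using bash’s built-in `$RANDOM` as a stand-in for a sampler. It is only an analogy and says nothing about how ChatGPT actually samples; the vocabulary, the seed 42 and the function name `answer_query` are made up for the example:

```shell
#!/usr/bin/env bash
# Toy "model": answers any query with 5 words drawn from a fixed vocabulary.
vocab=(yes no maybe probably never)

# Fills the global $answer. Deliberately not called via $(...), because bash
# reseeds $RANDOM in subshells, which would defeat the point of the demo.
answer_query() {
  answer=""
  local i idx
  for i in 1 2 3 4 5; do
    idx=$(( RANDOM % ${#vocab[@]} ))
    answer+="${vocab[idx]} "
  done
}

# You control the seed: assigning to RANDOM reseeds it, so the answer repeats.
RANDOM=42; answer_query; first=$answer
RANDOM=42; answer_query; second=$answer
echo "seed 42, run 1: $first"
echo "seed 42, run 2: $second"

# You don't control the seed: the generator just continues from wherever it
# is, so asking again generally gives a different answer.
answer_query; third=$answer
echo "no reseed:      $third"
```

Same input plus same seed gives the same output; take the seed away from the user and the same input no longer pins down the output.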