freamon

Mostly just used for moderation.

Main account is https://piefed.social/u/andrew_s

- 2 Posts

- 19 Comments

5·7 months ago

Well, there’s good news and bad news.

The good news is that Lemmy is now surrounding your spoilers with the expected Details and Summary tags, and moving the HR means PieFed is able to interpret the Markdown for both spoilers.

The bad news:

- It turns out KBIN doesn’t understand Details/Summary tags (even though a browser on its own does, so that’s KBIN’s problem).
- None of PieFed, KBIN, or MS Edge (looking at the raw HTML) can properly deal with a list that starts at ‘0’.
- Lemmy is no longer putting List tags around anything inside the spoilers. (So this post now looks worse on KBIN. Sorry about that, KBIN users.)

9·7 months ago

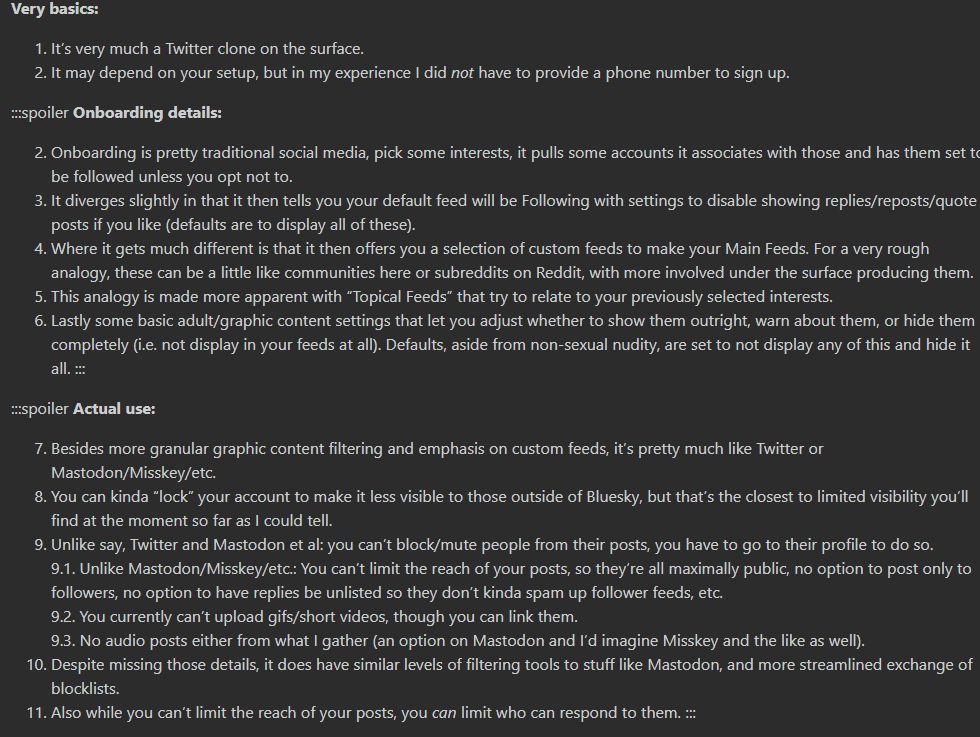

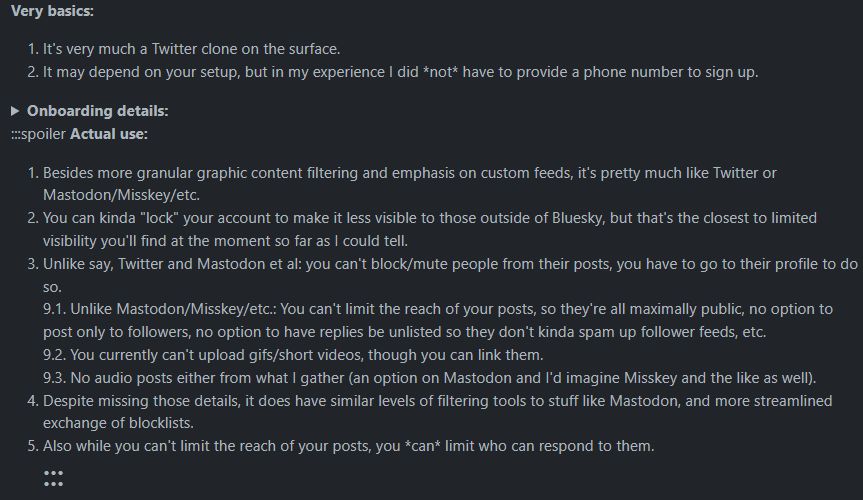

Firstly, sorry for any potential derailment. This is a comment about the Markdown used in your post (I wouldn’t normally mention it, but consider it fair game since this is a ‘Fediverse’ community).

The spec for Lemmy’s spoiler format is colon-colon-colon-space-spoiler (that is, "::: spoiler"). If you miss out the space, then whilst other Lemmy instances can reconstitute the Markdown to see this post as intended, Lemmy itself doesn’t generate the correct HTML when sending it out over ActivityPub. This means that other Fediverse apps that just look at the HTML (e.g. Mastodon, KBIN) can’t render it properly.

Screenshot from kbin:

Also, if you add a horizontal rule without a blank line above it, Markdown generally interprets this as meaning that you want the text above it to be a heading. So anything that doesn’t have the full force of Lemmy’s Markdown processor, and is trying to rebuild the HTML from the Markdown, now has to deal with the closing triple colons having ‘h2’ tags around them.

Screenshot from piefed:

(apologies again for being off-topic)
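Both pitfalls can be checked for before posting. The following is a minimal sketch, not Lemmy's actual parser: the function name `lint_markdown` and the two regular expressions are made up for illustration, and the heading check is only an approximation of the usual Markdown rule (it ignores lists, code blocks and similar contexts).

```python
import re

# Assumption: the opener should be ":::" + space + "spoiler"; this catches the no-space form.
SPOILER_MISSING_SPACE = re.compile(r"^:::spoiler\b")
# A line made only of three or more dashes, as typed for a horizontal rule.
DASH_RULE = re.compile(r"^\s{0,3}-{3,}\s*$")


def lint_markdown(text):
    """Return (line_number, message) pairs for the two pitfalls described above."""
    problems = []
    lines = text.splitlines()
    for i, line in enumerate(lines):
        if SPOILER_MISSING_SPACE.match(line):
            problems.append((i + 1, "spoiler opener is missing the space after ':::'"))
        # A dash-only line directly under non-blank text is read as a heading underline.
        if DASH_RULE.match(line) and i > 0 and lines[i - 1].strip():
            problems.append((i + 1, "rule without a blank line above it becomes a heading"))
    return problems


sample = ":::spoiler Title\nhidden text\n:::\n---\nnext section"
for number, message in lint_markdown(sample):
    print(number, message)
```

In the sample, the rule sits directly under the closing triple colons, which is the case that ends up wrapped in ‘h2’ tags.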

2·7 months ago

Update: for LW, this behaviour stopped around about Friday 12th April. Not sure what changed, but at least the biggest instance isn’t doing it anymore.

3·7 months ago

I’ve been coerced into reporting it as a bug in Lemmy itself - perhaps you could add your own observations here so I seem like less of a crank. Thanks.

14·7 months ago

I’ve since relented, and filed a bug.

7·7 months ago

Yeah, that’s the conclusion I came away with from the lemmy.ca and endlesstalk.org chats: that it’s due to multiple docker containers. In the LW Matrix room though, an admin said he saw one container send the same activity out 3 times. Also, LW were presumably running multiple containers with 0.18.5, when it didn’t happen, so it may be that multiple containers is only part of the problem.

31·7 months ago

When I’ve mentioned this issue to admins at lemmy.ca and endlesstalk.org (relevant posts here and here), they’ve suggested it’s a misconfiguration. When I said the same to lemmy.world admins (relevant comment here), they also suggested it was a misconfiguration. I mentioned it again recently on the LW channel, and only then was Lemmy itself proposed as a problem. It happens on plenty of servers, but not all of them, so I don’t know where the fault lies.

234·7 months ago

A bug report for software I don’t run, and so can’t reproduce the problem on, would be closed anyway. I think ‘steps to reproduce’ is pretty much the first line in a bug report.

If I ran a server that used someone else’s software to allow users to download a file, and someone told me that every 2nd byte needed to be discarded, I like to think I’d investigate and contact the software vendors if required. I wouldn’t tell the user that it’s something they should be doing. I feel like I’m the user in this scenario.

10·7 months ago

We were typing at the same time, it seems. I’ve included more info in a comment above, showing that they were POST requests.

Also, the green terminal is outputting part of the body for each request, to demonstrate. If they weren’t POST requests to /inbox, my server wouldn’t have even picked them up.

EDIT: by ‘server’ I mean the back-end one, the one nginx is reverse-proxying to.

28·7 months ago

They’re all POST requests. I trimmed it out of the log for space, but the first 6 requests on the video looked like this (nginx shows the data amount for GET, but not POST):

    ip.address - - [07/Apr/2024:23:18:44 +0000] "POST /inbox HTTP/1.1" 200 0 "-" "Lemmy/0.19.3; +https://lemmy.world"
    ip.address - - [07/Apr/2024:23:18:44 +0000] "POST /inbox HTTP/1.1" 200 0 "-" "Lemmy/0.19.3; +https://lemmy.world"
    ip.address - - [07/Apr/2024:23:19:14 +0000] "POST /inbox HTTP/1.1" 200 0 "-" "Lemmy/0.19.3; +https://lemmy.world"
    ip.address - - [07/Apr/2024:23:19:14 +0000] "POST /inbox HTTP/1.1" 200 0 "-" "Lemmy/0.19.3; +https://lemmy.world"
    ip.address - - [07/Apr/2024:23:19:44 +0000] "POST /inbox HTTP/1.1" 200 0 "-" "Lemmy/0.19.3; +https://lemmy.world"
    ip.address - - [07/Apr/2024:23:19:44 +0000] "POST /inbox HTTP/1.1" 200 0 "-" "Lemmy/0.19.3; +https://lemmy.world"

If I was running Lemmy, every second line would say 400, from it rejecting it as a duplicate. In terms of bandwidth, every line represents a full JSON, so I guess it’s about 2K minimum for the standard cruft, plus however much for the actual contents of the comment (the comment replying to this would’ve been 8K).
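A rough way to spot this pattern in an access log like the one quoted above is to count POSTs to /inbox per timestamp and sender. This is only a sketch: the function name and the regular expression are illustrative, the log format is assumed to match those lines, and two requests in the same second from a busy instance are a hint rather than proof (only the request bodies can confirm a duplicate).

```python
import re
from collections import Counter

# Fields assumed from the quoted lines: timestamp, method, path, status, user agent.
LOG_LINE = re.compile(
    r'\[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def repeated_inbox_posts(log_text):
    """Count POSTs to /inbox per (timestamp, user agent); keep those seen more than once."""
    counts = Counter()
    for line in log_text.splitlines():
        match = LOG_LINE.search(line)
        if match and match["method"] == "POST" and match["path"] == "/inbox":
            counts[(match["time"], match["agent"])] += 1
    return {key: n for key, n in counts.items() if n > 1}
```

Calling `repeated_inbox_posts(open(path).read())` on a log file (the path is whatever your nginx writes to) returns a dict keyed by timestamp and user agent.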

My server just took the requests and dumped the bodies out to a file, and then a script was outputting the object.id, object.type and object.actor into /tmp/demo.txt (which is another confirmation that they were POST requests, of course).
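The script itself isn’t shown here, so the following is only a guess at its shape: a minimal sketch that pulls the same three fields out of each dumped body and reports any object id that arrived more than once. The function names are hypothetical, and it assumes each body is a JSON activity whose "object" is usually an embedded activity.

```python
import json
from collections import Counter


def summarise(raw_bodies):
    """Pull object.id, object.type and object.actor from each JSON body."""
    rows = []
    for raw in raw_bodies:
        obj = json.loads(raw).get("object")
        # "object" may be a bare URL string rather than an embedded activity; skip those.
        if isinstance(obj, dict):
            rows.append((obj.get("id"), obj.get("type"), obj.get("actor")))
    return rows


def duplicated_ids(rows):
    """Return the object ids that were delivered more than once."""
    counts = Counter(object_id for object_id, _, _ in rows)
    return sorted(
        object_id for object_id, n in counts.items() if n > 1 and object_id is not None
    )
```

Feeding it the dumped bodies in arrival order makes the doubling visible without running Lemmy at all.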

312·7 months ago

I can’t reproduce anything, because I don’t run Lemmy on my server. It’s possible to infer that it’s related to the software (because LW didn’t do this when it was on 0.18.5). However, it’s not something that, for example, lemmy.ml does. An admin on LW matrix chat suggested that it’s likely a combination of instance configuration and software changes, but a bug report from me (who has no idea how LW is set up) wouldn’t be much use.

I’d gently suggest that, if LW admins think it’s a configuration problem, they should talk to other Lemmy admins, and if they think Lemmy itself plays a role, they should talk to the devs. I could be wrong, but this has been happening for a while now, and I don’t get the sense that anyone is talking to anyone about it.

Oh, right. The chat on GitHub is over my head, but I would have thought that solving the problem of instances sending every activity 2 or 3 times would help with that, since even rejecting something as a duplicate must eat up some time.

Yeah. It seems like the kind of community he’d post in. I think there was some drama between him and startrek.website, resulting in him or the admins deleting the account.

(same person on different account, just so I can preview what the table will look like)

Hmmm. This might not help you much. That community was launched on 2024-01-22, with a page full of posts, so by the time the crawler picked up on it, it was already at 501 subs, 23 posts.

It lost 15 posts on 2024-02-12 (Stamets?).

There’s data missing from 2024-02-26 - 2024-03-01 (this data is from the bot at !trendingcommunities@feddit.nl; I think those days are missing because I changed it from measuring Active Users Month (AUM) to Active Users Week (AUW)).

In terms of active users, the jump on 2024-03-04 is due to lemmy.world ‘upgrading’ to 0.19.3.

| date | subs | aum | posts |
|---|---|---|---|
| 2024-01-23 | 501 | 71 | 23 |
| 2024-01-24 | 601 | 103 | 27 |
| 2024-01-25 | 643 | 125 | 32 |
| 2024-01-26 | 663 | 130 | 33 |
| 2024-01-27 | 668 | 130 | 33 |
| 2024-01-28 | 668 | 130 | 33 |
| 2024-01-29 | 670 | 130 | 33 |
| 2024-01-30 | 670 | 130 | 33 |
| 2024-01-31 | 670 | 130 | 33 |
| 2024-02-01 | 670 | 130 | 33 |
| 2024-02-02 | 670 | 130 | 33 |
| 2024-02-03 | 672 | 130 | 33 |
| 2024-02-04 | 672 | 130 | 33 |
| 2024-02-05 | 672 | 130 | 33 |
| 2024-02-06 | 672 | 130 | 33 |
| 2024-02-07 | 673 | 140 | 34 |
| 2024-02-08 | 678 | 144 | 35 |
| 2024-02-09 | 681 | 150 | 36 |
| 2024-02-10 | 681 | 150 | 36 |
| 2024-02-11 | 698 | 155 | 38 |
| 2024-02-12 | 703 | 156 | 23 |
| 2024-02-13 | 703 | 156 | 23 |
| 2024-02-14 | 711 | 160 | 24 |
| 2024-02-15 | 711 | 160 | 24 |
| 2024-02-16 | 711 | 160 | 24 |
| 2024-02-17 | 713 | 160 | 24 |
| 2024-02-18 | 713 | 160 | 24 |
| 2024-02-19 | 714 | 160 | 24 |
| 2024-02-20 | 715 | 160 | 24 |
| 2024-02-21 | 715 | 160 | 24 |
| 2024-02-22 | 715 | 160 | 24 |
| 2024-02-23 | 715 | 119 | 24 |
| 2024-02-24 | 715 | 77 | 24 |
| 2024-02-25 | 716 | 48 | 24 |

[data missing]

| date | subs | auw | posts |
|---|---|---|---|
| 2024-03-02 | 718 | 2 | 25 |
| 2024-03-03 | 722 | 2 | 25 |
| 2024-03-04 | 726 | 129 | 26 |
| 2024-03-05 | 732 | 200 | 27 |
| 2024-03-06 | 748 | 397 | 29 |
| 2024-03-07 | 754 | 437 | 30 |
| 2024-03-08 | 755 | 453 | 31 |
| 2024-03-09 | 756 | 455 | 31 |
| 2024-03-10 | 770 | 576 | 33 |
| 2024-03-11 | 779 | 631 | 34 |
| 2024-03-12 | 782 | 651 | 35 |
| 2024-03-13 | 785 | 583 | 36 |
| 2024-03-14 | 787 | 473 | 36 |
| 2024-03-15 | 797 | 476 | 37 |
| 2024-03-16 | 802 | 493 | 39 |
| 2024-03-17 | 807 | 542 | 40 |
| 2024-03-18 | 812 | 650 | 41 |
| 2024-03-19 | 815 | 431 | 42 |
| 2024-03-20 | 817 | 400 | 43 |
| 2024-03-21 | 819 | 421 | 44 |
| 2024-03-22 | 819 | 437 | 44 |
| 2024-03-23 | 822 | 484 | 46 |
| 2024-03-24 | 829 | 533 | 47 |
| 2024-03-25 | 831 | 384 | 48 |
| 2024-03-26 | 833 | 395 | 49 |
| 2024-03-27 | 837 | 431 | 50 |
| 2024-03-28 | 840 | 462 | 51 |
| 2024-03-29 | 849 | 416 | 52 |
| 2024-03-30 | 851 | 390 | 53 |
| 2024-03-31 | 854 | 414 | 53 |
| 2024-04-01 | 861 | 466 | 56 |
| 2024-04-02 | 865 | 560 | 57 |
| 2024-04-03 | 868 | 473 | 58 |

2·9 months ago

I know for sure that Lemmy won’t; it’s likely the same for Mastodon.

I was wrong about not being able to WebFinger your account - I still had the @ at the beginning when I was trying. Doing it properly:

    curl --header 'accept: application/json' 'https://mostr.pub/.well-known/webfinger?resource=acct:910af9070dfd6beee63f0d4aaac354b5da164d6bb23c9c876cdf524c7204e66d@mostr.pub' | jq .

gets the right response.

However, I’m logged into lemmy.world and it still couldn’t get your account. At a guess, it’s because there’s a 20 character limit on usernames.
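The leading-@ mistake is easy to guard against when building the lookup URL in code. This is a small sketch rather than anything Lemmy does internally; `webfinger_url` is a made-up helper name, and it percent-encodes the query, which servers should treat the same as the unencoded form in the curl command.

```python
from urllib.parse import urlencode


def webfinger_url(handle):
    """Build the WebFinger lookup URL for a handle such as 'user@host' or '@user@host'."""
    # Drop any leading '@': the acct: resource is 'user@host' without it.
    user, _, host = handle.lstrip("@").rpartition("@")
    if not user or not host:
        raise ValueError(f"expected user@host, got {handle!r}")
    query = urlencode({"resource": f"acct:{user}@{host}"})
    return f"https://{host}/.well-known/webfinger?{query}"


print(webfinger_url("@nate0@nerdica.net"))
```

Both `@nate0@nerdica.net` and `nate0@nerdica.net` produce the same URL, so the stray @ no longer matters.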

7·9 months ago

Lemmy instances won’t search outside of their own databases if you’re not logged in.

But if you are, what it does can be recreated on a command-line by doing:

    curl --header 'accept: application/json' 'https://nerdica.net/.well-known/webfinger?resource=acct:nate0@nerdica.net' | jq .

This shows that your profile is at https://nerdica.net/profile/nate0. Lemmy puts all users at a /u/, but using WebFinger means that other Fediverse accounts don’t have to follow the same structure. For lemmy.world, you’re at https://lemmy.world/u/nate0@nerdica.net in the same way that a Mastodon user is at e.g. https://lemmy.world/u/MrLovenstein@mastodon.social.

edit:

However, if you WebFinger your mostr.pub account, you get:

    {"error":"Invalid host"}

so any ActivityPub instance will only ever be able to find you if you’ve interacted with it in some way to get a database entry.

Edit: also, I tried to do this again, thought I’d try the npub1 account as well, but got Gateway Timeouts, so there’s a bit of jankiness going on too.
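To make the two outcomes concrete, here is a hedged sketch of what a client might do with the parsed JSON from a WebFinger response. The function name `actor_url` is hypothetical, the set of accepted media types is an assumption about what platforms commonly send, and the only real response quoted in this thread is the error one.

```python
# Media types commonly used for the ActivityPub actor link (an assumption, not a full list).
ACTOR_TYPES = {
    "application/activity+json",
    'application/ld+json; profile="https://www.w3.org/ns/activitystreams"',
}


def actor_url(jrd):
    """Return the actor URL from a parsed WebFinger response, or None if there isn't one."""
    if "error" in jrd:
        return None
    for link in jrd.get("links", []):
        if link.get("rel") == "self" and link.get("type") in ACTOR_TYPES:
            return link.get("href")
    return None


print(actor_url({"error": "Invalid host"}))
```

With the "Invalid host" response there is nothing to follow, which is why a remote instance can’t discover the account on its own.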

Hmmm. Speaking of Fediverse interoperability, platforms other than yours (Pandacap) typically arrange things so that https://pandacap.azurewebsites.net was the domain, and something like https://pandacap.azurewebsites.net/users/lizard-socks was the user, but Pandacap wants to use https://pandacap.azurewebsites.net for both. Combined with the fact that it doesn’t seem to support /.well-known/nodeinfo, this means that no other platform knows what software it’s running.

When your actor sends something out, it uses the id https://pandacap.azurewebsites.net/, but when something tries to look that up, it returns a “Person” with a subtly different id of https://pandacap.azurewebsites.net (no trailing slash). So there’s the potential to create the following:

1. https://pandacap.azurewebsites.net/ sends something out.
2. The receiving instance looks it up and creates a user (with the id https://pandacap.azurewebsites.net).
3. https://pandacap.azurewebsites.net/ sends something else out. The instance looks in its DB, finds nothing, so looks it up and tries to create it again. The best case is that it meets a DB uniqueness constraint, because the ID it gets back from that lookup does actually exist (so it can use that, but it was a long way around to find it). The worst case, when there’s no DB uniqueness constraint, is that a ‘new’ user is created every time.

If every new platform treats the Fediverse as a wheel that needs to be re-invented, then the whole project is doomed.
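The cost of that id mismatch can be shown with a toy model. This is not how any particular platform stores actors: `ActorStore` and the two fetch functions are invented for illustration, and the set stands in for a table with a uniqueness constraint on the id.

```python
class ActorStore:
    """Toy stand-in for an instance's actor table, keyed by the exact id string."""

    def __init__(self, fetch):
        self.fetch = fetch  # callable: requested id -> id found in the returned actor document
        self.rows = set()   # a set mimics a uniqueness constraint on the id column
        self.lookups = 0

    def resolve(self, sender_id):
        if sender_id in self.rows:
            return sender_id  # known actor, no network round trip needed
        self.lookups += 1
        returned_id = self.fetch(sender_id)
        self.rows.add(returned_id)
        return returned_id


def mismatched(requested_id):
    # The actor document reports its id without the trailing slash.
    return requested_id.rstrip("/")


def consistent(requested_id):
    # The actor document reports exactly the id that was requested.
    return requested_id


for fetch in (mismatched, consistent):
    store = ActorStore(fetch)
    store.resolve("https://pandacap.azurewebsites.net/")
    store.resolve("https://pandacap.azurewebsites.net/")
    print(fetch.__name__, store.lookups)  # mismatched 2, then consistent 1
```

With matching ids the second activity is resolved from the local table; with the mismatch every activity triggers a fresh lookup, and without the uniqueness constraint it would also create a new row each time.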