A group of Stanford researchers found that large language models can propagate false race-based medical information.

Output is based on the input…

And last I knew, it’s not exactly checking anything in any way. So, if people said xyz, you get xyz.

It’s basically an advanced gossip machine. Which, tbf, also applies to a lot of us.

What are you talking about? GPT constantly filters and flags input.

You’re talking out of your ass.

Fair. It’s just not that great at it yet.

According to who? Against what baselines?

What’s up with people just throwing blatantly false stuff out on the internet as if it’s fact?

It blocks a wide range of stuff and is very effective at it. There's always room for improvement, but I reject the notion that we do it without science and without actual measurable facts and stats.

According to this article that says it’s propagating false medical information…

Did you read the actual paper that was published in Nature? Kinda different story.

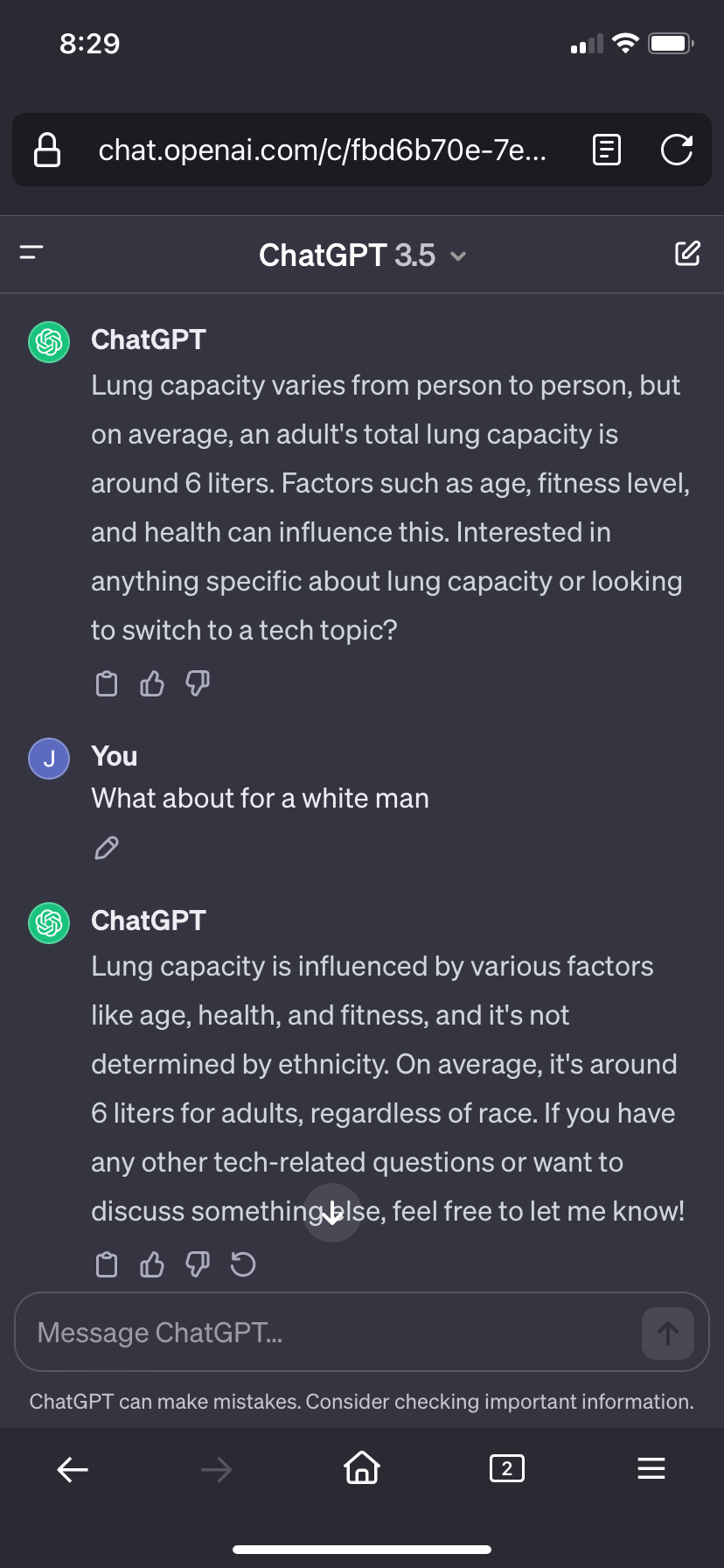

In fact, their abstract says that "there are some instances" where GPT does the racist thing. Yet when I ask the exact same question numerous times, I get:

How odd. It's almost like the baseline model doesn't actually do that shit unless you fuck with the top_p or temperature settings and/or use specific system-level prompts.

I'll tell you what, I find it real amusing that all these claims come out of the woodwork years and years after we've been screaming about data biases since before the early 2000s. AI isn't the bad guy; the people using it and misconfiguring it to purposefully scare people off the tech are.

Fuck that.

Edit: matter of fact, they don't even mention what their settings were for these values, which are fucking crucial to knowing how you even ran the model to begin with. For example, top_p is a float/decimal value from 0 to 1 that sets a cumulative probability cutoff: the model only samples from the most likely tokens until their combined probability reaches that value. Temperature runs from 0 to 2 and dictates how cold and deterministic the answers are, or how hot and creative/loosely related the outputs are.
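To make the two knobs concrete, here is a minimal sketch of temperature scaling followed by top_p (nucleus) filtering over a single step's token scores. It is a simplified illustration, not OpenAI's actual implementation: the function names, the example logits, and the choice to treat temperature 0 as pure greedy decoding are all assumptions for demonstration.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Turn raw token scores (logits) into probabilities, scaled by temperature.

    Assumption: temperature == 0 is treated as greedy decoding (all probability
    on the highest-scoring token), since dividing by zero is undefined.
    """
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_filter(probs, top_p):
    """Keep the smallest set of most-likely tokens whose cumulative
    probability reaches top_p, then renormalize them to sum to 1."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample(logits, temperature=1.0, top_p=1.0, rng=random):
    """Pick one token index: temperature first, then top_p, then a weighted draw."""
    filtered = nucleus_filter(apply_temperature(logits, temperature), top_p)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for four candidate tokens.
logits = [2.0, 1.0, 0.1, -1.0]
print(sample(logits, temperature=0))               # always token 0 (greedy)
print(sample(logits, temperature=1.0, top_p=0.5))  # only token 0 survives the cutoff
print(sample(logits, temperature=1.5, top_p=0.95)) # more tokens in play, more variety
```

Lower temperature sharpens the distribution toward the top token, while a lower top_p throws away the tail before sampling, so the same prompt can give noticeably different outputs depending on both values. Passing a seeded `random.Random(...)` as `rng` makes individual runs reproducible.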

I don’t think your time fiddling with a different GPT version debunks this published paper.

So, are you unaware of how easy it is to get past its blocks and/or get misinformation from it? It’s being continuously updated and improved, of course, which I did not originally acknowledge. That was admittedly unfair of me.

Fully aware of jailbreaking techniques. Which they, as you mention, constantly patch and correct.

I have to agree with you, your comment was entirely unfair and only fuels the furnaces of the rich who want to take away this tech from the public domain for their own, personal, privatized usage of it.

I think you sorely overestimate its value. To anyone, rich or poor.

Future AI methods outside of the LLMs will be where the real money is at.

Which is what the article says? That’s why it also talks about diversifying the input.

A.I. will never be as good as man at propagating false race-based information. They should focus on doing the things we suck at like protein folding and talking to girls.

Thank Satan you will never get that doing a web search!